It’s Monday morning and I’ve just caught the 8.52am bus into the city, which I do every day for work. I show my travel card to the usual driver, who nods. We don’t speak, and I make my way to my usual seat: third row back, facing forward, on the left. I acknowledge one or two of the many faces I see every day, but we don’t speak. Familiar strangers. I get my phone out, put my earphones in, put my playlist on, put my head down and start to disappear into my social media, my emails, my photos, my bubble. I’m immersed, but become aware that the bus has stopped and people are starting to look up and around. Nobody seems to know what is happening, but nobody speaks. I notice several people on CityCycles go past, something I’d quite like to try if I knew where or how to hire them. Finally the bus continues and people return silently to their phones. Despite rarely looking up, after making this journey twice a day for 3 years I instinctively know my stop is next, outside the Concert Hall. I often wonder what shows they have on and still keep meaning to find out, but never seem to find the time. I leave the bus, stopping to buy a coffee in the usual place, before walking the last 10 minutes along my usual route down the high street.

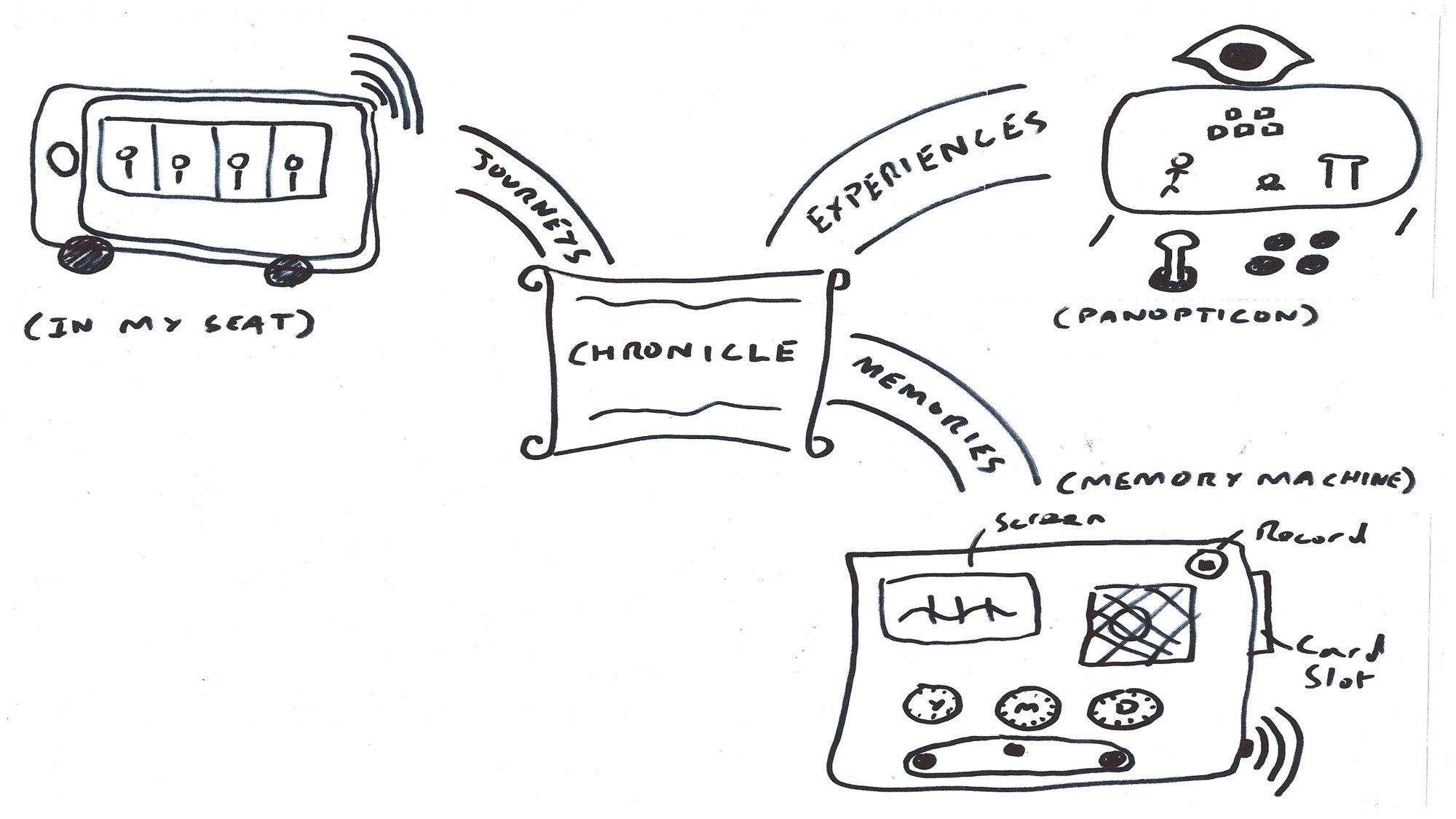

Unfortunately, such everyday journeys on public transport not only take up a notable proportion of our day, they can also be monotonous, isolating and largely unfulfilling experiences. What if we could address this by offering (bus) passengers ‘dynamic’ digital content that is more enjoyable, engaging and relevant, both enriching potential connections within the passenger ‘community’ on board and enabling connections to be made with the external environment en route? The ‘In my Seat’ project aims to do this by offering pertinent, personalised, passenger-driven content, both stakeholder-delivered and user-generated, which can be accessed via a mobile app linked directly to individual sensors in a passenger’s seat / vehicle.

For the passenger, such rich and context-specific content may include a ‘today’s fun fact’ or joke left by a previous passenger, or an ongoing, on-board bus game. More practically, it may offer real-time notifications of potential delays, information on complementary, sustainable transport (e.g. city bike hire), upcoming shows at a local theatre, or lunchtime deals at cafes along the route. For public transport operators and city councils, being able to identify when, where and how many people are using particular public transport modes (e.g. buses) is invaluable in being able to ‘evidence’ need and demand, and thereby align services and supporting infrastructure effectively.

With this in mind, the project will shortly run a series of stakeholder engagement workshops and user (passenger) design workshops to frame and (co-)design our initial concept(s) / mobile app. Outputs from these workshops will subsequently be posted on the ‘In my Seat’ blog.

Nancy Hughes, Research Fellow, Human Factors Research Group, Faculty of Engineering, University of Nottingham